This volume of the 50-part book series continues to follow our crew of 8 gay men vacationing on Fire Island, every year, for 50 years, tracing out the last 5 decades of gay history. This is a video synopsis of the chapter, along with gay cultural milestones and anecdotes of the year. And it is completely generated by AI: the images, the 50-volume arc, the text, the synopses, the anecdotes, even the programs which wrote the accompanying 350-page volume. Only this posting and the background music are human-generated. It has lots of pretty images of half-naked men at the beach, which to me is exactly what gay men should be enjoying in the summer, instead of peering at their phones to see the latest output of Taylor Swift. Get a life! Get laid!

This volume was generated with a target style of William Burroughs, which it achieves fairly easily. Burroughs pioneered what he called the “Cut-Up”, which involves writing a text, then cutting it physically into fragments and re-assembling them to try to make sense, which is conceptually congruent with how a Large Language Model (LLM) AI constructs its own texts. You’ll notice that, in stark contrast to most GPT-4o (ChatGPT) output, the sentences are brief, choppy, and usually fairly stark.

“1977 - The gutters screamed with leather, with whips and chains dripping inferno sweat. The virgin birth of 'Drummer' magazine, pulsing under the underground, lit the torch of the darker alleyways. Pages whisper secrets, a subculture dawns amidst the smoke, gleaming black leather salvation.”

It also sounds like Allen Ginsberg (volume 10) and some others of his era. The cut-up style is also an example of Aleatory Art, or in musical terms (Brian Eno) Ambient Music: stochastic, random, sampled. I always enjoyed the fragmentary nature, and my first explorations at generating books with LLMs were quite fragmentary and strange. This was pure 60’s avant-garde. I studied Aleatory Music when I was around 15 or 16 (my poor teachers), since I had plowed through most musical centuries mastering 6 instruments, and I became fascinated with the 60’s. A wind chime is an example of aleatory music, like these texts: it has a rule set, and then tinkles through an invariant chord, always different, always the same. Aeolian Harps do the same, the whistling wind creating chords. The door in my office here in San Francisco is an Aeolian Harp at about 5-6pm, as the wind whips down Twin Peaks and makes woo-woo noises while (I think) Ravens peck on the roof - they're comical. I hate wind chimes.
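For the curious, the cut-up recipe described above (fixed material, chance deciding the order) can be sketched in a few lines of Python. This is only an illustration of the idea, not how the volume was actually produced: the function name `cut_up`, the `fragment_len` parameter, and the seeded shuffle are all my own assumptions for the sketch.

```python
import random
from typing import Optional


def cut_up(text: str, fragment_len: int = 3, seed: Optional[int] = None) -> str:
    """Cut a text into word fragments and reassemble them in random order.

    Hypothetical helper for illustration: fragment_len is the number of
    words per "strip of paper"; seed makes the shuffle repeatable.
    """
    words = text.split()
    # Slice the word list into consecutive fragments (the "scissors" step).
    fragments = [
        words[i:i + fragment_len] for i in range(0, len(words), fragment_len)
    ]
    # Shuffle the fragments (the "reassemble by chance" step).
    rng = random.Random(seed)
    rng.shuffle(fragments)
    return " ".join(" ".join(fragment) for fragment in fragments)


if __name__ == "__main__":
    source = (
        "The gutters screamed with leather, with whips and chains "
        "dripping inferno sweat."
    )
    print(cut_up(source, fragment_len=3))
```

Like the wind chime, every run uses exactly the same words and only the order changes: always different, always the same.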

The reunion buzzed with electric excitement. Each arrival brought new stories from the city, gossip that tumbled out amidst gritty laughs and twisted clasps of welcome. The cove, our refuge from the grind, vibrated with the pulse of something spectacular, something raw, about to unfold.

And then, in the mad scramble of our bliss, came the resolution to a riddle we'd been dragging through the slush and cold – the enigmatic vanishing of Sparkle, the disco-crooning parrot. Turned out, she had landed herself a primo gig for the summer, echoing numbers at the subterranean drag bingo.

Jamal's laughter cracked through the smoky haze, his eyes glinting like shards of broken glass.

“I found her right when she squawked out ‘B-11’ in perfect sync with the drag queen caller!”

Definitive chaos, raw and pure. On a hunch, he had drifted to the bingo, whispers of a feathered diva swirling in his mind like phantom radio frequencies. The scene was quintessential Sparkle, a twisted vision of flamboyant delirium anchoring the chaos. Sparkle – always a splinter in the mundane.

The second part of this summary is the visual accompaniment, which highlights the limitations of image generation in the current version of these AI systems. You may or may not have seen the consternation when someone asked for an image of a “pre-revolutionary general” and the OpenAI example image came up with someone who clearly wasn’t a White Male European, clearly an anachronism.

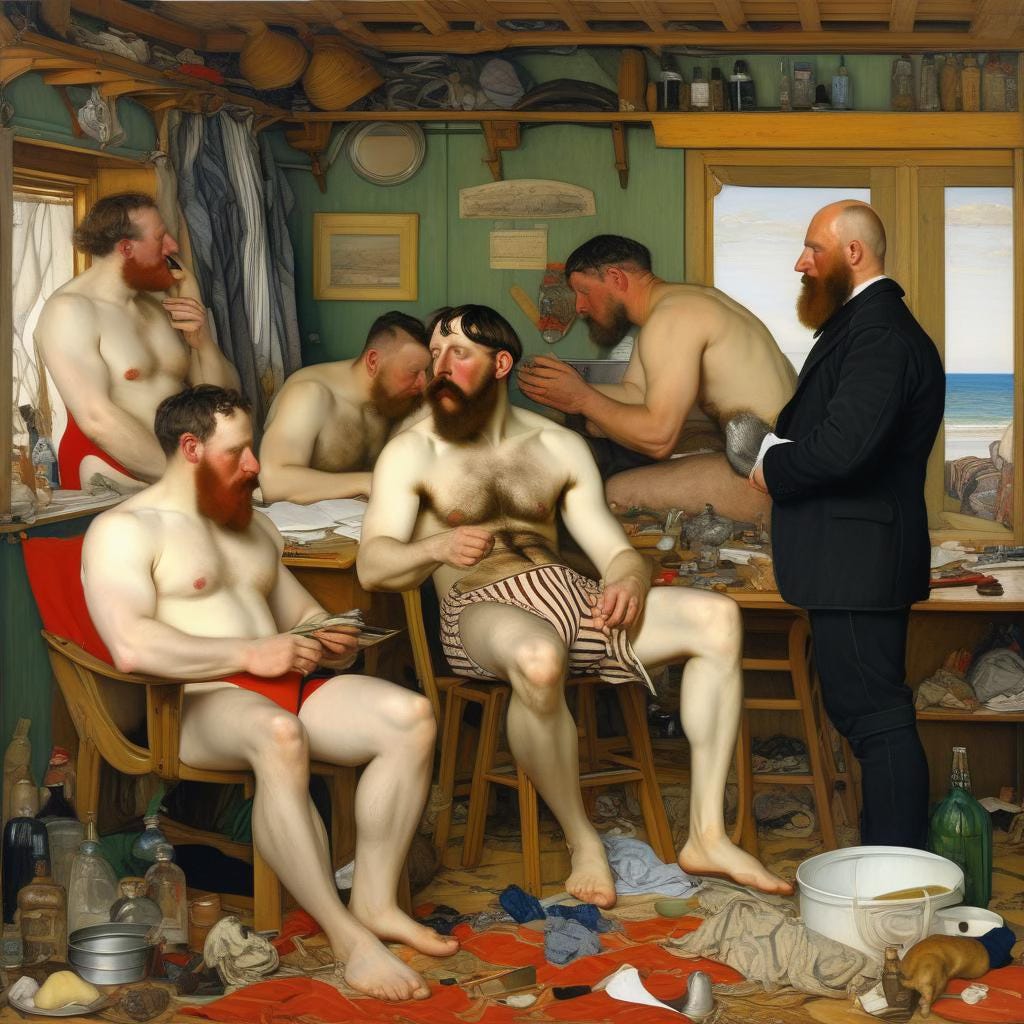

As with the textual generation, the visual AI tools (I use several, but for consistency's sake I’ve used Stable Diffusion here) have a harsh problem with history. Our target style for this summary is the Pre-Raphaelite painter Ford Madox Brown. What I get from the image styling are lovely images of very ordinary-looking European male bodies. In this scene, where they’re making disco costumes:

Creamy white skin, red hair, some body hair (more later: extremely difficult), large beards, very late-19th-century looking. However, the visual summary called out several African-American characters, who never appeared in the output. I examined the process of generating images in detail for some time, and used all sorts of tricks to emphasize Black characters, increase the probability of their presence in the output, and attempt to force the result, generally to no avail. If the artist didn’t have Asian, Black, or other visual groups in their repertoire, there was a low-to-zero chance of them appearing in the synthesized output for that style.

European Bias: Were you to read up on Black representation in art, the subject pops up over and over and over. You don’t see Black people frequently (or at all) in art in major museums, in European art collections, or in the study of art because what is studied or conserved was made by White European people who painted the people around them, other White European people. It's dead simple of course, and is the source of what I’ll call a significant bias in many image generators, Stable Diffusion being one example. The situation is hard to dispute, and the evidence is simple: using art up to the 20th century as a style basis, you’ll generally not get Black or Asian figures, with a few exceptions. In the prior volume (1) I used Tom of Finland as the style target, and while not frequent, there were enough examples of Black men in his work that I didn’t have to force it. As I noted, Tom of Finland rarely if ever drew night scenes, so those never emerged, no matter how forcefully I insisted.

Non-European Bias - Smoothness: To the despair of people working with image generators and males, and for reasons I only slowly grasped, body hair on a European-looking male model is an unusual proposition, to say the least. One of the best image generators on the market, Midjourney, finds it all but impossible to generate men with any body hair whatsoever. In experiments, it took a few thousand iterations of prompts to get even modest body hair. With Stable Diffusion, I had to use my image library of men (a few hundred thousand digital images) to support construction of a “LoRA”, a small auxiliary file of fine-tuned weights which provides examples of men with chest hair to the AI. Otherwise, smooth bodies more representative of Non-European, Non-Mediterranean men are the usual output. But not just men.

Female Bias - Breasts and Bras: The final odd bias is that if a chest is the result of a generation, even one with a thick pelt, image generation systems find it statistically more suitable to put a bra or some similar covering on the chest. So, in the world of muscular and non-muscular men, image generators faced with an expanse of chest put bras on it all the time, usually with comical but irritating results. I would hypothesize that this is because: most AI researchers are heterosexual men, and their work is with female images they enjoy; there are more images of women than men in art and photography, so training sets are biased towards female representation; and there is a bias against nipples in generation, which tends to push the outputs to have some sort of nipple cover no matter what.

I am a gay man, so when I manipulate images for fun I’m interested in male images, and it makes sense to me that the teams building these tools follow their own interests the same way. It also suggests that for commercial products, there is a strong likelihood of what men like being better represented in the samples generated than what women would enjoy. I’m not sure, that’s very conjectural, but it would be interesting to pursue.

So for the gauzy, Pre-Raphaelite look of Ford Madox Brown, enjoy the brief video and the gay anecdotes for 1977. Red-haired semi-naked men are a plus in my book, sometimes with body hair, disporting on the beach and in the disco, and lending a quaint air in contrast to the quite aggressive, almost menacing text from Burroughs.

More on quaint next volume.

2) Summer Fluff: Burroughs/Madox Brown